publications

(*) denotes equal contribution

2026

- Raster2Seq: A Unified Framework for Image-to-Sequence Generation. Hao Phung and Hadar Averbuch-Elor. 2026

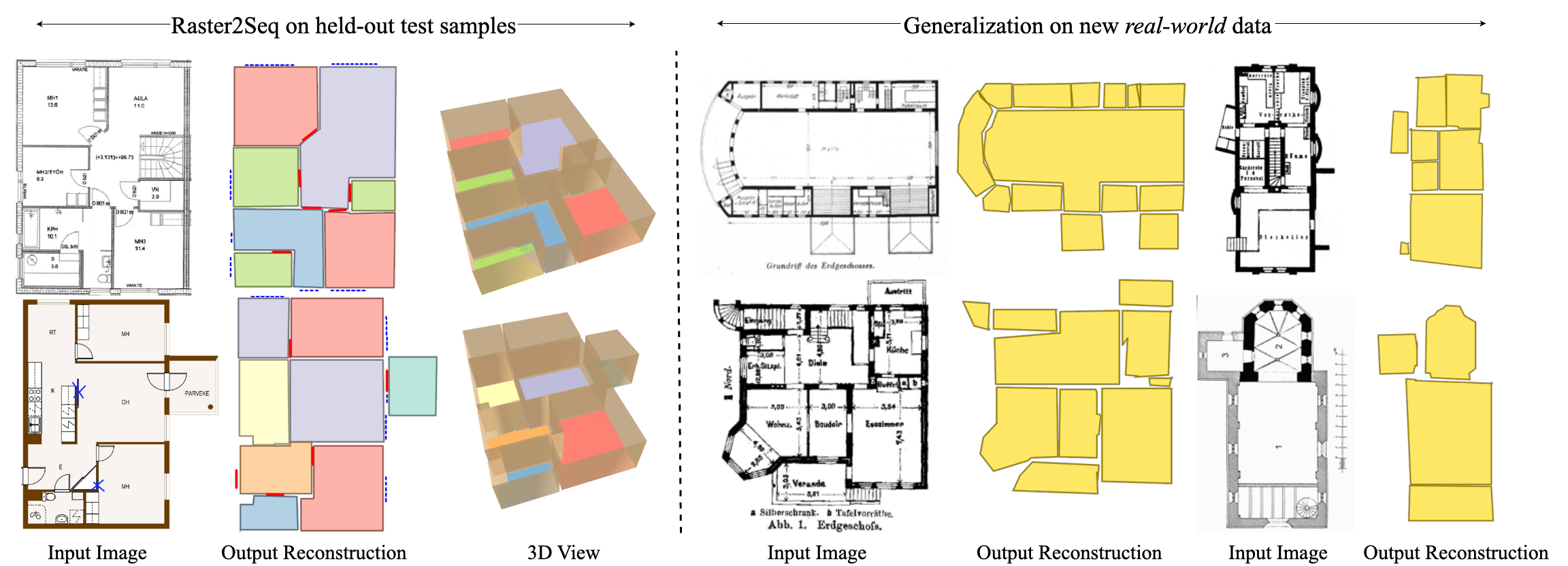

Reconstructing a structured vector-graphics representation from a rasterized floorplan image is an important prerequisite for computational tasks involving floorplans, such as automated understanding or CAD workflows. However, existing techniques struggle to faithfully generate the structure and semantics conveyed by complex floorplans that depict large indoor spaces with many rooms and a varying number of polygon corners. To this end, we propose Raster2Seq, which frames floorplan reconstruction as a sequence-to-sequence task in which floorplan elements, such as rooms, windows, and doors, are represented as labeled polygon sequences that jointly encode geometry and semantics. Our approach introduces an autoregressive decoder that learns to predict the next corner conditioned on image features and previously generated corners, using guidance from learnable anchors. These anchors represent spatial coordinates in image space, allowing them to effectively direct the attention mechanism toward informative image regions. By embracing the autoregressive mechanism, our method offers flexibility in the output format, enabling it to efficiently handle complex floorplans with numerous rooms and diverse polygon structures. Our method achieves state-of-the-art performance on standard benchmarks such as Structured3D, CubiCasa5K, and Raster2Graph, while also demonstrating strong generalization to more challenging datasets such as WAFFLE, which contains diverse room structures and complex geometric variations.
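To make "labeled polygon sequences that jointly encode geometry and semantics" concrete, here is a minimal sketch of flattening a floorplan into one token stream and recovering it. The token layout (corners first, then the class label, then a `<SEP>` token, with `<EOS>` at the end) and the function names are hypothetical choices for illustration, not the paper's actual format.

```python
from typing import List, Optional, Tuple, Union

Corner = Tuple[int, int]
Token = Union[Corner, str]
Element = Tuple[str, List[Corner]]

SEP = "<SEP>"  # hypothetical separator token between polygons
EOS = "<EOS>"  # hypothetical end-of-sequence token


def serialize(elements: List[Element]) -> List[Token]:
    """Flatten labeled polygons into one token sequence: corners, label, SEP, ..., EOS."""
    seq: List[Token] = []
    for label, corners in elements:
        seq.extend(corners)  # geometry: one token per corner, in drawing order
        seq.append(label)    # semantics: the class label closes the polygon
        seq.append(SEP)
    seq.append(EOS)
    return seq


def deserialize(seq: List[Token]) -> List[Element]:
    """Invert serialize(): rebuild (label, corners) pairs from the token stream."""
    elements: List[Element] = []
    corners: List[Corner] = []
    label: Optional[str] = None
    for tok in seq:
        if tok == EOS:
            break
        if tok == SEP:
            elements.append((label, corners))
            corners, label = [], None
        elif isinstance(tok, tuple):
            corners.append(tok)
        else:
            label = tok
    return elements


plan = [
    ("bedroom", [(0, 0), (4, 0), (4, 3), (0, 3)]),
    ("door", [(4, 1), (4, 2)]),
]
tokens = serialize(plan)
print(len(tokens))  # 11: 4 corners + label + SEP, 2 corners + label + SEP, EOS
```

Because neither the number of polygons nor the number of corners per polygon is fixed, a decoder can emit such a stream one token at a time, which is the output-format flexibility the abstract attributes to the autoregressive design.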

@article{phung2026raster2seq, title = {Raster2Seq: A Unified Framework for Image-to-Sequence Generation}, author = {Phung, Hao and Averbuch-Elor, Hadar}, year = {2026}, published = {false}, }

- HyperCT: Low-Rank Hypernet for Unified Chest CT Analysis. Fengbei Liu, Sunwoo Kwak, Hao Phung, Nusrat Binta Nizam, Ilan Richter, Nir Uriel, Hadar Averbuch-Elor, Deborah Estrin, and Mert R. Sabuncu. In Medical Imaging with Deep Learning, 2026

Non-contrast chest CTs offer a rich opportunity for both conventional pulmonary and opportunistic extra-pulmonary screening. While Multi-Task Learning (MTL) can unify these diverse tasks, standard hard-parameter sharing approaches are often suboptimal for modeling distinct pathologies. We propose HyperCT, a framework that dynamically adapts a Vision Transformer backbone via a Hypernetwork. To ensure computational efficiency, we integrate Low-Rank Adaptation (LoRA), allowing the model to regress task-specific low-rank weight updates rather than full parameters. Validated on a large-scale dataset of radiological and cardiological tasks, HyperCT outperforms various strong baselines, offering a unified, parameter-efficient solution for holistic patient assessment. Our code will be made publicly available.
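The core mechanism described above, a hypernetwork that regresses a task-specific low-rank weight update instead of full parameters, can be sketched in plain Python. This is a minimal sketch under stated assumptions: the hypernetwork is a single hypothetical linear layer, the `alpha / rank` scaling is a common LoRA convention rather than something the abstract specifies, and all names and sizes are illustrative, not HyperCT's architecture.

```python
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def reshape(flat: List[float], rows: int, cols: int) -> Matrix:
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


class LoRAHypernet:
    """Hypothetical hypernetwork: task embedding -> LoRA factors B (d_out x r) and A (r x d_in)."""

    def __init__(self, emb_dim: int, d_out: int, d_in: int, rank: int,
                 alpha: float = 1.0, seed: int = 0):
        rng = random.Random(seed)
        self.d_out, self.d_in, self.rank = d_out, d_in, rank
        self.scale = alpha / rank  # common LoRA scaling convention (assumed)
        n_params = d_out * rank + rank * d_in  # only the low-rank factors are regressed
        # One linear layer mapping the task embedding to every factor entry.
        self.proj = [[rng.gauss(0.0, 0.02) for _ in range(emb_dim)] for _ in range(n_params)]

    def delta_w(self, task_emb: List[float]) -> Matrix:
        flat = [sum(w * e for w, e in zip(row, task_emb)) for row in self.proj]
        split = self.d_out * self.rank
        b = reshape(flat[:split], self.d_out, self.rank)
        a = reshape(flat[split:], self.rank, self.d_in)
        return [[self.scale * v for v in row] for row in matmul(b, a)]


def adapted_weight(w: Matrix, hyper: LoRAHypernet, task_emb: List[float]) -> Matrix:
    """Shared backbone weight plus a task-specific low-rank update."""
    dw = hyper.delta_w(task_emb)
    return [[w[i][j] + dw[i][j] for j in range(len(w[0]))] for i in range(len(w))]


w = [[1.0 if i == j else 0.0 for j in range(6)] for i in range(4)]  # frozen 4x6 backbone weight
hyper = LoRAHypernet(emb_dim=3, d_out=4, d_in=6, rank=2)
w_task = adapted_weight(w, hyper, [1.0, 0.0, 0.0])  # hypothetical embedding of one task
```

For this toy layer the hypernetwork regresses 4·2 + 2·6 = 20 numbers instead of the 24 entries of a full update; the saving grows quickly with layer width, which is the efficiency argument for combining a hypernetwork with LoRA.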

@inproceedings{liu2026hyperct, booktitle = {Medical Imaging with Deep Learning}, title = {Hyper{CT}: Low-Rank Hypernet for Unified Chest {CT} Analysis}, author = {Liu, Fengbei and Kwak, Sunwoo and Phung, Hao and Nizam, Nusrat Binta and Richter, Ilan and Uriel, Nir and Averbuch-Elor, Hadar and Estrin, Deborah and Sabuncu, Mert R.}, year = {2026}, published = {true}, }

2025

- Encoder-Decoder Diffusion Language Models for Efficient Training and Inference. Marianne Arriola, Yair Schiff, Hao Phung, Aaron Gokaslan, and Volodymyr Kuleshov. In The Thirty-ninth Annual Conference on Neural Information Processing Systems, 2025

Discrete diffusion models enable parallel token sampling for faster inference than autoregressive approaches. However, prior diffusion models use a decoder-only architecture, which requires sampling algorithms that invoke the full network at every denoising step and incur high computational cost. Our key insight is that discrete diffusion models perform two types of computation: 1) representing clean tokens and 2) denoising corrupted tokens, which enables us to use separate modules for each task. We propose an encoder-decoder architecture to accelerate discrete diffusion inference, which relies on an encoder to represent clean tokens and a lightweight decoder to iteratively refine a noised sequence. We also show that this architecture enables faster training of block diffusion models, which partition sequences into blocks for better quality and are commonly used in diffusion language model inference. We introduce a framework for Efficient Encoder-Decoder Diffusion (E2D2), consisting of an architecture with specialized training and sampling algorithms, and we show that E2D2 achieves superior trade-offs between generation quality and inference throughput on summarization, translation, and mathematical reasoning tasks.
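The division of labor described above (an encoder that represents clean tokens, a lightweight decoder that iteratively refines a noised block) can be sketched as a block-wise sampling loop. The `encode`/`decode` callables, the `MASK` id, and the one-token-per-step unmasking rule are hypothetical stand-ins, not E2D2's actual modules or sampler; the toy versions only count how often each module runs.

```python
from typing import Callable, Dict, List

MASK = -1  # hypothetical id for a corrupted (masked) token

Encoder = Callable[[List[int]], List[float]]
Decoder = Callable[[List[float], List[int], int], List[int]]


def sample_blocks(encode: Encoder, decode: Decoder,
                  num_blocks: int, block_len: int, steps: int) -> List[int]:
    """Generate a sequence block by block with separate encoder and decoder modules."""
    tokens: List[int] = []
    for _ in range(num_blocks):
        context = encode(tokens)  # represent the clean prefix once per block
        block = [MASK] * block_len
        for step in range(steps):
            block = decode(context, block, step)  # refine only the noised block
        tokens.extend(block)
    return tokens


# Toy stand-ins that count how often each module is invoked.
calls: Dict[str, int] = {"encoder": 0, "decoder": 0}


def toy_encode(prefix: List[int]) -> List[float]:
    calls["encoder"] += 1
    return [float(len(prefix))]


def toy_decode(context: List[float], block: List[int], step: int) -> List[int]:
    calls["decoder"] += 1
    out = list(block)
    if MASK in out:
        out[out.index(MASK)] = int(context[0]) + step  # unmask one position per step
    return out


seq = sample_blocks(toy_encode, toy_decode, num_blocks=3, block_len=4, steps=4)
print(seq)    # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
print(calls)  # {'encoder': 3, 'decoder': 12}
```

With 3 blocks of 4 denoising steps each, the heavy encoder runs 3 times and the lightweight decoder 12 times; a decoder-only model would invoke its full network at all 12 steps, which is the cost the abstract targets.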

@inproceedings{arriola2025e2d2, booktitle = {The Thirty-ninth Annual Conference on Neural Information Processing Systems}, title = {Encoder-Decoder Diffusion Language Models for Efficient Training and Inference}, author = {Arriola, Marianne and Schiff, Yair and Phung, Hao and Gokaslan, Aaron and Kuleshov, Volodymyr}, year = {2025}, published = {true}, page = {https://m-arriola.com/e2d2/}, }

- Simple Guidance Mechanisms for Discrete Diffusion Models. Yair Schiff*, Subham Sekhar Sahoo*, Hao Phung*, Guanghan Wang*, Sam Boshar, Hugo Dalla-torre, Bernardo P Almeida, Alexander Rush, Thomas Pierrot, and Volodymyr Kuleshov. In The Thirteenth International Conference on Learning Representations, 2025

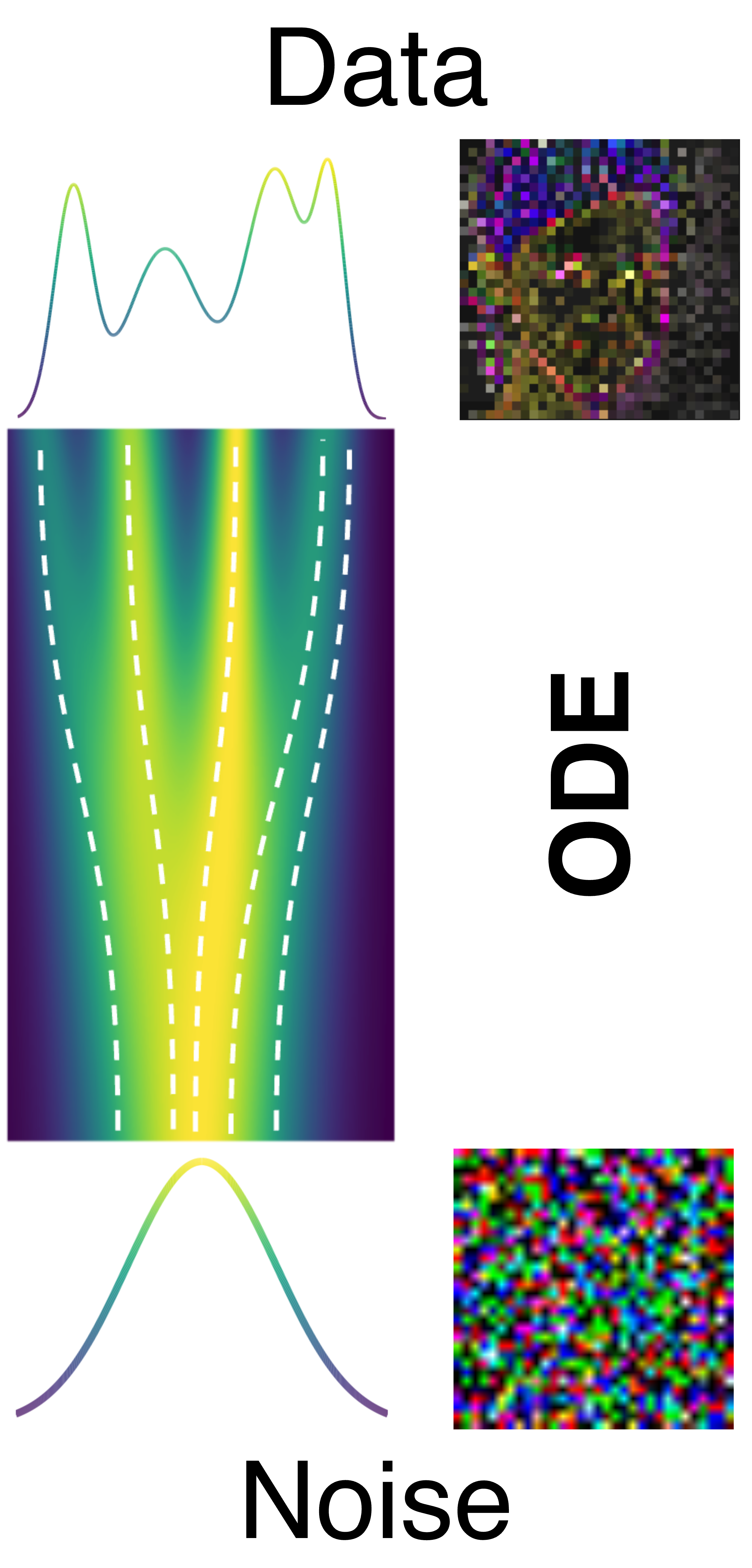

Diffusion models for continuous data gained widespread adoption owing to their high quality generation and control mechanisms. However, controllable diffusion on discrete data faces challenges given that continuous guidance methods do not directly apply to discrete diffusion. Here, we provide a straightforward derivation of classifier-free and classifier-based guidance for discrete diffusion, as well as a new class of diffusion models that leverage uniform noise and that are more guidable because they can continuously edit their outputs. We improve the quality of these models with a novel continuous-time variational lower bound that yields state-of-the-art performance, especially in settings involving guidance or fast generation. Empirically, we demonstrate that our guidance mechanisms combined with uniform noise diffusion improve controllable generation relative to autoregressive and diffusion baselines on several discrete data domains, including genomic sequences, small molecule design, and discretized image generation. Code is available at https://github.com/kuleshov-group/discrete-diffusion-guidance.
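For intuition, classifier-free guidance on a single categorical (per-token) distribution can be written as tempering: the guided distribution is proportional to p(x | y)^γ · p(x)^(1 − γ), i.e. a weighted combination of conditional and unconditional log-probabilities followed by renormalization. The sketch assumes both models' per-token probabilities are already available and strictly positive; the function name and inputs are hypothetical, and it is not claimed to reproduce the paper's exact per-step reverse-process formulation.

```python
import math
from typing import List


def cfg_categorical(p_cond: List[float], p_uncond: List[float], gamma: float) -> List[float]:
    """Guided distribution proportional to p_cond**gamma * p_uncond**(1 - gamma)."""
    logits = [gamma * math.log(pc) + (1.0 - gamma) * math.log(pu)
              for pc, pu in zip(p_cond, p_uncond)]
    m = max(logits)  # subtract the max for a numerically stable softmax
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    return [e / z for e in exps]


p_cond = [0.5, 0.3, 0.2]    # hypothetical p(x | y) for one token position
p_uncond = [0.2, 0.3, 0.5]  # hypothetical p(x) for the same position
guided = cfg_categorical(p_cond, p_uncond, gamma=2.0)
```

Setting `gamma = 1` recovers the conditional model and `gamma = 0` the unconditional one; `gamma > 1` sharpens the distribution toward tokens the condition favors (here the first token's probability rises above 0.5).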

@inproceedings{schiff2024discreteguidance, booktitle = {The Thirteenth International Conference on Learning Representations}, title = {Simple Guidance Mechanisms for Discrete Diffusion Models}, author = {Schiff, Yair and Sahoo, Subham Sekhar and Phung, Hao and Wang, Guanghan and Boshar, Sam and Dalla-torre, Hugo and de Almeida, Bernardo P and Rush, Alexander and Pierrot, Thomas and Kuleshov, Volodymyr}, year = {2025}, published = {true}, page = {https://discrete-diffusion-guidance.github.io/}, }

- Self-Corrected Flow Distillation for Consistent One-Step and Few-Step Image Generation. Quan Dao*, Hao Phung*, Trung Dao, Dimitris N. Metaxas, and Anh Tran. In The 39th Annual AAAI Conference on Artificial Intelligence (AAAI), 2025

Flow matching has emerged as a promising framework for training generative models, demonstrating impressive empirical performance while being relatively easy to train compared to diffusion-based models. However, it still requires numerous function evaluations during sampling. To address this limitation, we introduce a self-corrected flow distillation method that effectively integrates consistency models and adversarial training within the flow-matching framework. This work pioneers consistent generation quality in both few-step and one-step sampling. Our extensive experiments validate the effectiveness of our method, yielding superior results both quantitatively and qualitatively on CelebA-HQ and on zero-shot benchmarks on the COCO dataset. Our implementation is released at https://github.com/VinAIResearch/SCFlow.

@inproceedings{dao2024scflow, title = {Self-Corrected Flow Distillation for Consistent One-Step and Few-Step Image Generation}, author = {Dao, Quan and Phung, Hao and Dao, Trung and Metaxas, Dimitris N. and Tran, Anh}, booktitle = {The 39th Annual AAAI Conference on Artificial Intelligence (AAAI)}, year = {2025}, published = {true}, }

2024

- DiMSUM: Diffusion Mamba - A Scalable and Unified Spatial-Frequency Method for Image Generation. Hao Phung*, Quan Dao*, Trung Dao, Hoang Phan, Dimitris N. Metaxas, and Anh Tran. In The Thirty-eighth Annual Conference on Neural Information Processing Systems (NeurIPS), 2024

We introduce a novel state-space architecture for diffusion models that effectively harnesses spatial and frequency information to enhance the inductive bias toward local features in input images for image generation tasks. State-space networks, including Mamba, a revolutionary advancement in recurrent neural networks, typically scan input sequences from left to right, which makes it difficult to design effective scanning strategies, especially for image data. Our method demonstrates that integrating wavelet transformation into Mamba enhances the local structure awareness of visual inputs and better captures long-range relations of frequencies by disentangling them into wavelet subbands that represent both low- and high-frequency components. These wavelet-based outputs are then processed and seamlessly fused with the original Mamba outputs through a cross-attention fusion layer, combining spatial and frequency information to optimize the order awareness of state-space models, which is essential for the details and overall quality of image generation. In addition, we introduce a globally shared transformer to supercharge the performance of Mamba, harnessing its exceptional power to capture global relationships. Through extensive experiments on standard benchmarks, our method demonstrates superior results compared to DiT and DiffuSSM, achieving faster training convergence and delivering high-quality outputs. The code and pretrained models are released at https://github.com/VinAIResearch/DiMSUM.
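The scanning difficulty mentioned above can be illustrated with a toy example: flatten an image row by row and run a left-to-right linear state-space recurrence over it. This is a plain linear time-invariant recurrence with scalar parameters, assumed for illustration; it is not Mamba's selective (input-dependent) scan, and all names are hypothetical.

```python
from typing import List


def raster_scan(image: List[List[float]]) -> List[float]:
    """Flatten a 2D grid row by row (left to right, top to bottom)."""
    return [v for row in image for v in row]


def ssm_scan(xs: List[float], a: float, b: float, c: float) -> List[float]:
    """Linear state-space recurrence h_t = a*h_{t-1} + b*x_t, y_t = c*h_t."""
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys


image = [[0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0]]
ys = ssm_scan(raster_scan(image), a=0.5, b=1.0, c=1.0)
print(ys[2], ys[4])  # 0.5 0.125
```

The bright pixel at row 0, column 1 reaches its right-hand neighbor after one step (weight 0.5) but its neighbor directly below only after three steps (weight 0.125), even though both are spatially adjacent. This loss of 2D locality under a 1D scan order is the kind of problem that motivates adding local, frequency-aware information.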

@inproceedings{phung2024dimsum, title = {DiMSUM: Diffusion Mamba - A Scalable and Unified Spatial-Frequency Method for Image Generation}, author = {Phung, Hao and Dao, Quan and Dao, Trung and Phan, Hoang and Metaxas, Dimitris N. and Tran, Anh}, booktitle = {The Thirty-eighth Annual Conference on Neural Information Processing Systems (NeurIPS)}, year = {2024}, published = {true}, page = {https://vinairesearch.github.io/DiMSUM/}, }

2023

- Flow Matching in Latent Space. Quan Dao*, Hao Phung*, Binh Nguyen, and Anh Tran. arXiv preprint arXiv:2307.08698, 2023

Flow matching is a recent framework for training generative models that exhibits impressive empirical performance while being relatively easy to train compared with diffusion-based models. Despite these advantages, prior methods still face the challenges of expensive computation and the large number of function evaluations required by off-the-shelf solvers in pixel space. Furthermore, although latent-based generative methods have shown great success in recent years, they remain underexplored for flow matching. In this work, we propose to apply flow matching in the latent spaces of pretrained autoencoders, which offers improved computational efficiency and scalability for high-resolution image synthesis. This enables flow-matching training on constrained computational resources while maintaining quality and flexibility. Additionally, our work pioneers the integration of various conditions into flow matching for conditional generation tasks, including label-conditioned image generation, image inpainting, and semantic-to-image generation. Through extensive experiments, our approach demonstrates its effectiveness both quantitatively and qualitatively on various datasets, such as CelebA-HQ, FFHQ, LSUN Church & Bedroom, and ImageNet. We also provide a theoretical control of the Wasserstein-2 distance between the reconstructed latent flow distribution and the true data distribution, showing that it is upper-bounded by the latent flow matching objective.
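The flow-matching objective that the abstract builds on can be sketched in a few lines. This assumes the common linear (rectified-flow) path z_t = (1 − t)·z0 + t·z1 from a noise sample z0 to a data latent z1, whose target velocity is z1 − z0; that convention, the toy "oracle" velocity field, and all names are assumptions for illustration. In the latent setting, z1 would come from a pretrained autoencoder's encoder and the sampled latent would be passed to its decoder.

```python
from typing import Callable, List

Vec = List[float]
VelocityFn = Callable[[Vec, float], Vec]


def interpolate(z0: Vec, z1: Vec, t: float) -> Vec:
    """Point on the straight path from noise z0 (t=0) to latent z1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]


def fm_loss(model: VelocityFn, z0: Vec, z1: Vec, t: float) -> float:
    """Mean squared error between predicted and target velocity (z1 - z0) at time t."""
    zt = interpolate(z0, z1, t)
    target = [b - a for a, b in zip(z0, z1)]
    pred = model(zt, t)
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(target)


def euler_sample(model: VelocityFn, z0: Vec, steps: int) -> Vec:
    """Integrate dz/dt = v(z, t) from t=0 (noise) to t=1 (latent) with Euler steps."""
    z, dt = list(z0), 1.0 / steps
    for i in range(steps):
        v = model(z, i * dt)
        z = [zi + dt * vi for zi, vi in zip(z, v)]
    return z


z0 = [0.0, 1.0]   # hypothetical noise sample
z1 = [1.0, -1.0]  # hypothetical latent of one data point


def oracle(z: Vec, t: float) -> Vec:
    # Ideal velocity toward the single target z1 along a straight path.
    return [(b - a) / (1.0 - t) for a, b in zip(z, z1)]


print(fm_loss(oracle, z0, z1, t=0.5))     # 0.0
print(euler_sample(oracle, z0, steps=4))  # [1.0, -1.0]
```

A network trained with `fm_loss` over many (z0, z1, t) triples learns an approximation of such a velocity field; working on low-dimensional latents rather than pixels is what makes each evaluation cheaper.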

@article{dao2023lfm, title = {Flow Matching in Latent Space}, author = {Dao, Quan and Phung, Hao and Nguyen, Binh and Tran, Anh}, journal = {arXiv preprint arXiv:2307.08698}, year = {2023}, published = {false}, page = {https://vinairesearch.github.io/LFM/}, }

- Anti-DreamBooth: Protecting users from personalized text-to-image synthesis. Thanh Van Le*, Hao Phung*, Thuan Hoang Nguyen*, Quan Dao*, Ngoc Tran, and Anh Tran. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2023

Text-to-image diffusion models are nothing short of a revolution, allowing anyone, even without design skills, to create realistic images from simple text inputs. With powerful personalization tools like DreamBooth, they can generate images of a specific person just by learning from a few reference images of that person. However, when misused, such a powerful and convenient tool can produce fake news or disturbing content targeting any individual victim, posing a severe negative social impact. In this paper, we explore a defense system called Anti-DreamBooth against such malicious use of DreamBooth. The system aims to add subtle noise perturbation to each user’s image before it is published in order to disrupt the generation quality of any DreamBooth model trained on these perturbed images. We investigate a wide range of algorithms for perturbation optimization and extensively evaluate them on two facial datasets over various text-to-image model versions. Despite the complicated formulation of DreamBooth and diffusion-based text-to-image models, our methods effectively defend users from the malicious use of those models. Their effectiveness holds even under adverse conditions, such as model or prompt/term mismatch between training and testing. Our code will be available at https://github.com/VinAIResearch/Anti-DreamBooth.
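Adversarial perturbations of this kind are commonly optimized with projected gradient ascent under an ℓ∞ budget, so that each pixel moves by at most a small amount. The sketch shows one generic PGD loop on a flat list of pixel values; the gradient function, budget, step size, and toy loss are hypothetical stand-ins (in practice the gradient would come from a diffusion model's training loss), and this is not presented as the paper's exact algorithm.

```python
from typing import Callable, List

Vec = List[float]


def sign(v: float) -> float:
    return float((v > 0) - (v < 0))


def pgd_perturb(x: Vec, grad_fn: Callable[[Vec], Vec],
                eps: float, step: float, iters: int) -> Vec:
    """Increase a loss by signed gradient ascent, keeping |x_adv - x| <= eps per pixel."""
    x_adv = list(x)
    for _ in range(iters):
        g = grad_fn(x_adv)
        x_adv = [xa + step * sign(gi) for xa, gi in zip(x_adv, g)]
        # Project back into the eps-ball around the clean image, then into [0, 1].
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv


def toy_grad(x: Vec) -> Vec:
    # Gradient of a hypothetical loss sum((x - 0.5)**2), which grows as pixels move away from 0.5.
    return [2.0 * (v - 0.5) for v in x]


clean = [0.5, 0.2, 0.9]
adv = pgd_perturb(clean, toy_grad, eps=0.03, step=0.01, iters=10)
```

The projection step is what keeps the noise "subtle": however many ascent steps are taken, no pixel leaves the `eps`-ball around the clean image.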

@inproceedings{le_etal2023antidreambooth, title = {Anti-DreamBooth: Protecting users from personalized text-to-image synthesis}, author = {Le, Thanh Van and Phung, Hao and Nguyen, Thuan Hoang and Dao, Quan and Tran, Ngoc and Tran, Anh}, booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)}, year = {2023}, published = {true}, page = {https://anti-dreambooth.github.io/}, }

- Wavelet Diffusion Models are fast and scalable Image Generators. Hao Phung*, Quan Dao*, and Anh Tran. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

Diffusion models are rising as a powerful solution for high-fidelity image generation, surpassing GANs in quality in many circumstances. However, their slow training and inference speed is a major bottleneck that prevents their use in real-time applications. A recent DiffusionGAN method significantly decreases the model's running time by reducing the number of sampling steps from thousands to several, but its speed still lags well behind that of its GAN counterparts. This paper aims to reduce this speed gap by proposing a novel wavelet-based diffusion scheme. We extract low- and high-frequency components at both the image and feature levels via wavelet decomposition and adaptively handle these components for faster processing while maintaining good generation quality. Furthermore, we propose a reconstruction term that effectively boosts training convergence. Experimental results on the CelebA-HQ, CIFAR-10, LSUN-Church, and STL-10 datasets show that our solution is a stepping stone toward real-time, high-fidelity diffusion models. Our code and pre-trained checkpoints will be available at https://github.com/VinAIResearch/WaveDiff.git.
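To make "extracting low- and high-frequency components via wavelet decomposition" concrete, here is a single-level 2D Haar transform in plain Python that splits an image into four half-resolution subbands and inverts them exactly. The orthonormal 1/2 scaling and the LL/LH/HL/HH naming are one common convention, assumed here; they are not necessarily the conventions used in the released WaveDiff code.

```python
from typing import Dict, List

Grid = List[List[float]]


def haar_dwt2(x: Grid) -> Dict[str, Grid]:
    """Single-level 2D Haar transform of an image with even height and width."""
    h, w = len(x), len(x[0])
    bands: Dict[str, Grid] = {k: [] for k in ("LL", "LH", "HL", "HH")}
    for i in range(0, h, 2):
        rows: Dict[str, List[float]] = {k: [] for k in bands}
        for j in range(0, w, 2):
            a, b = x[i][j], x[i][j + 1]
            c, d = x[i + 1][j], x[i + 1][j + 1]
            rows["LL"].append((a + b + c + d) / 2)  # local average (low frequency)
            rows["LH"].append((a + b - c - d) / 2)  # top-row minus bottom-row difference
            rows["HL"].append((a - b + c - d) / 2)  # left-column minus right-column difference
            rows["HH"].append((a - b - c + d) / 2)  # diagonal difference
        for k in bands:
            bands[k].append(rows[k])
    return bands


def haar_idwt2(bands: Dict[str, Grid]) -> Grid:
    """Exact inverse of haar_dwt2."""
    ll, lh, hl, hh = bands["LL"], bands["LH"], bands["HL"], bands["HH"]
    out: Grid = []
    for i in range(len(ll)):
        top: List[float] = []
        bottom: List[float] = []
        for j in range(len(ll[0])):
            s, v, hz, dg = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            top += [(s + v + hz + dg) / 2, (s + v - hz - dg) / 2]
            bottom += [(s - v + hz - dg) / 2, (s - v - hz + dg) / 2]
        out += [top, bottom]
    return out


img = [[1.0, 2.0, 3.0, 4.0],
       [5.0, 6.0, 7.0, 8.0],
       [2.0, 2.0, 2.0, 2.0],
       [2.0, 2.0, 2.0, 2.0]]
bands = haar_dwt2(img)
print(bands["LL"])  # [[7.0, 11.0], [4.0, 4.0]]
```

The three detail subbands vanish wherever the image is locally constant (the bottom half here), and each subband has a quarter of the original pixel count, so a network operating on subbands sees a smaller spatial grid; this is one plausible reading of the abstract's "faster processing".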

@inproceedings{phung2023wavelet, title = {Wavelet Diffusion Models are fast and scalable Image Generators}, author = {Phung, Hao and Dao, Quan and Tran, Anh}, booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}, year = {2023}, published = {true}, pages = {10199-10208}, }

2020

- VQASTO: Visual Question Answering System for Action Surveillance based on Task Ontology. Huy Quoc Vo*, Tien-Hao Phung*, and Ngoc Quoc Ly. In 2020 7th NAFOSTED Conference on Information and Computer Science (NICS), 2020

Question answering (QA) is a popular research topic because of its real-world applications. Going further, Visual Question Answering (VQA) research aims to combine visual and textual information for question answering. Its drawback is the dependence on learned models, which impedes human intervention and interpretation. To the best of our knowledge, most existing work concentrates on the general problem or on specific contexts, but none places a QA system in the context of action surveillance. In this paper, we propose a QA system based on Task Ontology, which is mainly responsible for mapping a question sentence to the corresponding tasks that must be carried out to reach the appropriate answer. The advantages of task ontology are that it incorporates human knowledge to solve a specific problem and that it is reusable. The performance of the system thus depends heavily on its subtasks/models. In our scope, we focus on two main subtasks: pose estimation/tracking and skeleton-based action recognition. We also introduce enhancements that improve the time efficiency of pose estimation/tracking, and we propose a new spatial-temporal feature, based on drawing skeleton sequences as images, for skeleton-based action recognition of videos in the wild. This method can, to some extent, overcome the challenge of poorly shaped poses/skeletons produced by pose estimation on real-world videos. The difficulty of action recognition in VQASTO is that it must take its input from pose estimation and pose tracking, which is markedly different from merely performing recognition on readily available, good-quality skeletons.

@inproceedings{vo2020vqasto, author = {Vo, Huy Quoc and Phung, Tien-Hao and Ly, Ngoc Quoc}, booktitle = {2020 7th NAFOSTED Conference on Information and Computer Science (NICS)}, title = {VQASTO: Visual Question Answering System for Action Surveillance based on Task Ontology}, year = {2020}, volume = {}, number = {}, pages = {273-279}, doi = {10.1109/NICS51282.2020.9335891}, published = {true}, }